Ivy Aug 24, 2020

We covered several string operations in our previous blogs. In this article, we move on to some interesting and useful concepts of text preprocessing using NLTK in Python. Let us first build the thought process behind text preprocessing by looking at the following sample text.

sample_text = '''A nuclear power plant is a thermal power station in which the heat source is a nuclear reactor. As is typical of thermal power stations, heat is usssded to generate steam that drives a steam turbine connected to aaaaa generator that produces electricity. As of 2018, the International Atomic Enertgy Agency reported ther were 450 nuclear power reactors in operation in 30 countries.'''

If you wanted to do text preprocessing using NLTK in Python, what steps would come to mind? How do you think a text is processed? Is a whole document processed at once, or is it broken down into individual words? Do words like “of”, “the”, and “to” add any value to our text analysis? Do they provide us with any information? What can be done about them? Can you spot some incorrectly spelled words? Would you like to correct them to improve your text analysis? Give these questions some thought. Now let us work through them one by one.

A machine can process text only after it is broken down into smaller structures. That is why Text Analytics techniques such as the Term Document Matrix (TDM) and TF-IDF process texts at the individual word level. We will deal with TDM, TF-IDF, and many more advanced NLP concepts in our future articles. For now, we start our text preprocessing using NLTK in Python with Tokenization.

Tokenization is the process of splitting textual data into smaller and more meaningful components called tokens. The most useful tokenization techniques include sentence and word tokenization. In this, we break down a text document (or corpus) into sentences and each sentence into words.

A text corpus can be a collection of paragraphs, where each paragraph can be further split into sentences. We call this sentence segmentation. We can split a sentence by specific delimiters like a period (.) or a newline character (\n) and sometimes even a semicolon (;). Next, we will explain the various techniques the NLTK library provides for sentence tokenization.
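Before turning to NLTK, the delimiter idea can be sketched with the standard library alone. This is only an illustration: the helper name `naive_sentence_split` and the `demo` string are made up for this example, and the second call shows why plain delimiter splitting is too crude for real text.

```python
import re

def naive_sentence_split(text):
    """Split on '.', ';' or newline and drop empty pieces (illustrative only)."""
    pieces = re.split(r"[.;\n]", text)
    return [p.strip() for p in pieces if p.strip()]

demo = ("Heat is used to generate steam. The steam drives a turbine; "
        "the turbine drives a generator.\nAs of 2018, there were 450 reactors.")

print(naive_sentence_split(demo))
# An abbreviation is wrongly treated as a sentence boundary:
print(naive_sentence_split("Dr. Rao visited the plant."))
```

The abbreviation "Dr." gets split into its own piece, which is the kind of mistake the NLTK sentence tokenizers are designed to avoid.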

import nltk

nltk.download('punkt')

nltk.sent_tokenize(sample_text)

punkt_st = nltk.tokenize.PunktSentenceTokenizer()

punkt_st.tokenize(sample_text)

SENTENCE_TOKENS_PATTERN = r'(?<!\w\.\w.)(?<![A-Z][a-z]\.)(?<![A-Z]\.)(?<=\.|\?|\!)\s'

regex_st = nltk.tokenize.RegexpTokenizer(pattern=SENTENCE_TOKENS_PATTERN, gaps=True)

regex_st.tokenize(sample_text)

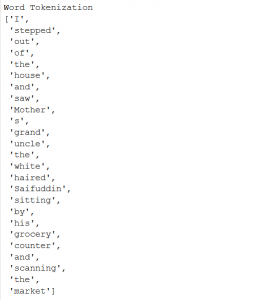
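To see what the sentence pattern used with `RegexpTokenizer` is doing, here is a small sketch that applies the same regular expression with the standard library's `re.split`. With `gaps=True` the pattern describes the separators between tokens, so splitting on it should behave much the same way. The `demo` sentence is an invented example, not part of the sample text.

```python
import re

# Same pattern as in the article: split on whitespace that follows '.', '?' or '!',
# unless the preceding text looks like an abbreviation ("U.S.", "Dr.", "A.").
SENTENCE_TOKENS_PATTERN = r'(?<!\w\.\w.)(?<![A-Z][a-z]\.)(?<![A-Z]\.)(?<=\.|\?|\!)\s'

demo = "Dr. Rao visited the U.S. plant in 2018. Was it running? Yes!"
sentences = re.split(SENTENCE_TOKENS_PATTERN, demo)

for s in sentences:
    print(s)
```

The negative lookbehinds keep "Dr." and "U.S." inside the first sentence, while the period after "2018" and the question mark still end their sentences.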

nltk.word_tokenize(sample_text)

We are going to use a different text for the next few word tokenizers.

text = "I stepped out of the house and saw Mother ’s grand-uncle, the white-haired Saifuddin, sitting by his grocery counter and scanning the market."

nltk.TreebankWordTokenizer().tokenize(text)

regex_wt = nltk.RegexpTokenizer(pattern=r'\w+', gaps=False)

regex_wt.tokenize(text)

whitespace_wt = nltk.WhitespaceTokenizer()

words = whitespace_wt.tokenize(sample_text)
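The difference between the regular expression and whitespace approaches is easiest to see side by side. The sketch below uses only the standard library as a rough stand-in: `re.findall(r'\w+', ...)` mirrors a `RegexpTokenizer` with `gaps=False`, and `str.split()` approximates whitespace tokenization. The shortened `demo` string is an assumption made for this example.

```python
import re

demo = "the white-haired Saifuddin, sitting by his grocery counter."

# Keep only runs of word characters: punctuation and hyphens are dropped.
regex_tokens = re.findall(r"\w+", demo)

# Split on whitespace only: punctuation stays attached to the words.
whitespace_tokens = demo.split()

print(regex_tokens)
print(whitespace_tokens)
```

The `\w+` pattern breaks "white-haired" into two tokens and discards the comma, whereas whitespace splitting keeps "white-haired" whole but leaves "Saifuddin," and "counter." with their punctuation.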

Words like a, an, the, of, it, you, you’re, and many more are classified as “stopwords”. These words add little or no information to the text, yet they add to the computation needed for text processing. That is why we usually remove them before analysis.

In Python, we have got a couple of libraries that provide us with a list of stop words.

The list of stopwords that the NLTK library provides can be printed with the code below.

import nltk

nltk.download('stopwords')

from nltk.corpus import stopwords

print(stopwords.words('english'))

So what does our text look like after we remove all these words? Follow the code below and its output to see which words are left.

from nltk.corpus import stopwords

from nltk.tokenize import word_tokenize

stop_words = set(stopwords.words('english'))

word_tokens = word_tokenize(text)

filtered_sentence = [w for w in word_tokens if w not in stop_words]

print(word_tokens)

print(filtered_sentence)

As you may have observed, we converted the list of NLTK stopwords to a set. That means we can apply all the usual set operations to our stopword variable stop_words. By now you probably have an idea of how we are going to create our customized list of stopwords. Follow the code below, where we add a couple of words that we want to remove from the text.

new_stopwords = {"Saifuddin", "grocery", "market"}

stop_words.update(new_stopwords)

print("length of new stop_words",len(stop_words))

Now, if you filter your text with the new set of stopwords, you will get a new output list of words. Do give it a try.
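If you want to check your attempt, here is a minimal sketch of the filtering step. To keep it runnable without any downloads, `base_stop_words` is a tiny hand-written stand-in for the NLTK English list and `tokens` is a hand-tokenized fragment of the sentence; both are assumptions made for this example. The lowercase check is an extra choice made here so that capitalized words such as "I" are matched as well.

```python
# Stand-in for set(stopwords.words('english')); the real NLTK list is much longer.
base_stop_words = {"i", "of", "the", "and", "by", "his", "out"}

# Custom words we also want to drop.
new_stopwords = {"Saifuddin", "grocery", "market"}
stop_words = base_stop_words | new_stopwords  # set union

tokens = ["I", "stepped", "out", "of", "the", "house", "and", "saw",
          "Saifuddin", "sitting", "by", "his", "grocery", "counter"]

# Drop a token if it, or its lowercase form, is a stopword.
filtered = [w for w in tokens
            if w not in stop_words and w.lower() not in stop_words]

print(filtered)
```

With the real NLTK list in place of `base_stop_words`, the same comprehension applies unchanged.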

In the next article, we are going to talk about other concepts of text preprocessing using NLTK in Python, such as spelling correction, expanding contractions, and removing accented characters. These techniques will help us refine our text before we feed it to machine learning models. Stay tuned. Happy Learning!!!
